ScholarAgent: Making Machine Learning Research Accessible with Generative AI

Introduction

Understanding complex machine learning research papers can be daunting, especially for learners without a strong academic background. ScholarAgent bridges this gap by enabling users to ask questions about the seminal paper “Attention Is All You Need” and receive clear, conversational answers powered by Generative AI.

This blogpost explores how ScholarAgent leverages cutting-edge Gen AI capabilities to simplify dense academic content, making it accessible to students, developers, and enthusiasts alike.

ScholarAgent - https://www.kaggle.com/code/masa373dev/capstone-scholaragent

The Problem: Accessibility of Research Papers

Machine learning research papers are often written in dense, technical language, making them inaccessible to many learners. This creates a barrier to understanding foundational concepts like the Transformer architecture introduced in “Attention Is All You Need”.

How ScholarAgent Solves This Problem

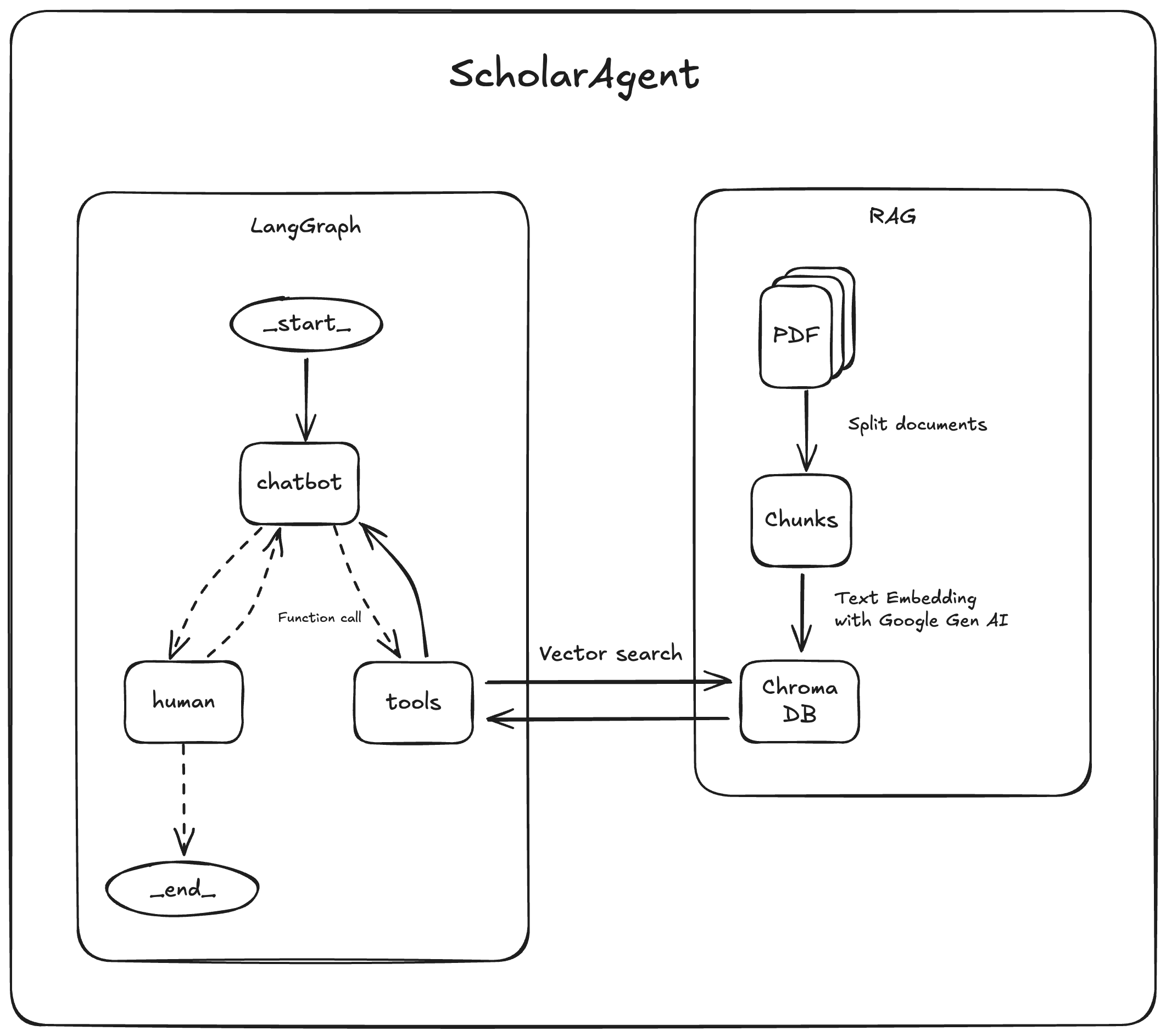

ScholarAgent uses Generative AI to break down complex content into digestible insights. By combining Retrieval-Augmented Generation (RAG), vector search, and agent orchestration, ScholarAgent provides an interactive way to explore and understand research papers.

Key Features and Implementation

1. Document Processing with Google Gen AI Embedding Model

ScholarAgent processes the research paper by embedding its content using Google's Gen AI Embedding model. The embeddings are stored in ChromaDB, a vector database optimized for fast and accurate searches.

# Embed the PDF(research paper) using Gemini and store in ChromaDB

from chromadb import Documents, EmbeddingFunction

class GeminiEmbeddingFunction(EmbeddingFunction):

def __call__(self, input: Documents):

response = client.models.embed_content(

model="models/text-embedding-004",

contents=input,

)

return [e.values for e in response.embeddings]

embed_fn = GeminiEmbeddingFunction()

# Initialize the ChromaDB client and create a collection

chroma_client = chromadb.Client()

db = chroma_client.get_or_create_collection(name=DB_NAME, embedding_function=embed_fn)

db.add(documents=chunked_documents, ids=[str(i) for i in range(len(chunked_documents))])

2. Retrieval-Augmented Generation (RAG)

When a user asks a question, ScholarAgent retrieves the most relevant chunks of the paper from ChromaDB. These chunks are then used as context for generating a natural language response.

# Query the vector database for relevant chunks

result = db.query(query_texts=["What is a Transformer?"], n_results=3)

retrieved_chunks = result["documents"]

3. Response Generation with Gemini 2.0 Flash

The retrieved context is passed to Gemini 2.0 Flash, which generates a conversational response tailored to the user's query.

# Initialize agent model with tools

llm = ChatGoogleGenerativeAI(model="gemini-2.0-flash")

llm_with_tools = llm.bind_tools([search_info])

# Generate a response using Gemini 2.0 Flash

response = llm_with_tools.invoke([retrieved_chunks])

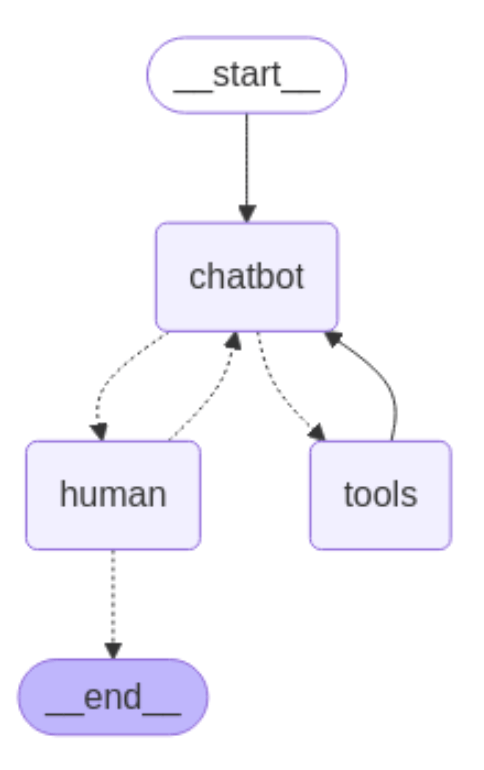

4. Agent-Orchestrated Function Calling with LangGraph

ScholarAgent uses LangGraph to orchestrate complex workflows, such as rephrasing queries or chaining multiple steps. This ensures a seamless user experience.

from langgraph.graph import StateGraph

from IPython.display import Image

graph_builder = StateGraph(BotState)

# Define the nodes

graph_builder.add_node("chatbot", chatbot_node_with_tools)

graph_builder.add_node("human", human_node)

graph_builder.add_node("tools", tool_node)

# Define the edges

graph_builder.add_conditional_edges("chatbot", maybe_route_to_tools)

graph_builder.add_conditional_edges("human", maybe_exit_human_node)

graph_builder.add_edge("tools", "chatbot")

graph_builder.add_edge(START, "chatbot")

graph_with_menu = graph_builder.compile()

Image(graph_with_menu.get_graph().draw_mermaid_png())

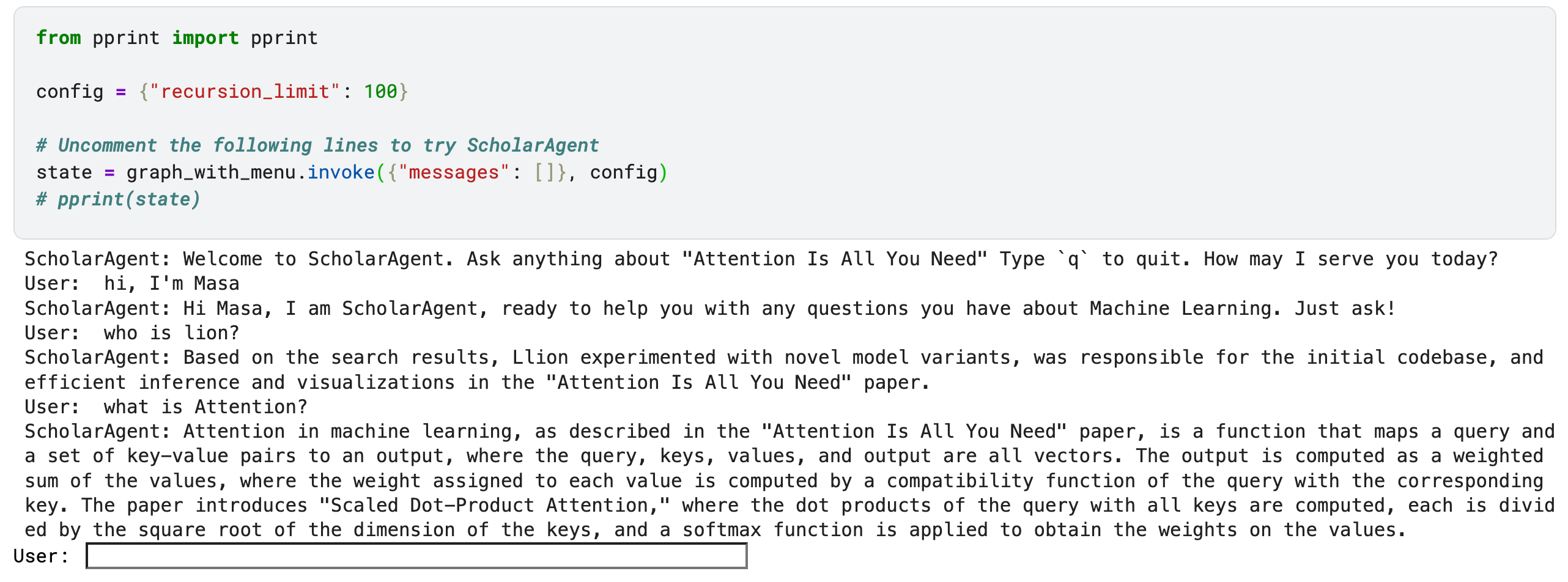

Results and Impact

ScholarAgent demonstrates the following Generative AI capabilities:

- Document Understanding: Embedding and processing dense academic content.

- RAG: Retrieving relevant information to answer user queries.

- Agent Orchestration: Managing complex workflows for enhanced interactivity.

By making research papers accessible, ScholarAgent empowers learners to explore advanced concepts like the Transformer architecture interactively.

Limitations and Future Directions

Current Limitations

- Domain-Specific Knowledge: The model's understanding is limited to the embedded content.

- Scalability: Processing large documents can be resource-intensive.

- Contextual Understanding: Responses may lack depth for highly nuanced questions.

Future Improvements

- Multi-Paper Support: Extend ScholarAgent to handle multiple research papers.

- Enhanced Contextual Awareness: Improve response quality by integrating external knowledge sources.

- Interactive Visualizations: Add diagrams and flowcharts to explain complex concepts.

Conclusion

ScholarAgent showcases how Generative AI can make advanced research accessible to a broader audience. By leveraging RAG, vector search, and agent orchestration, it transforms dense academic content into interactive, conversational insights.

Try ScholarAgent today and explore the groundbreaking concepts behind “Attention Is All You Need”!

# Launch the chatbot

state = graph_with_menu.invoke({"messages": []})

Call to Action

Have questions about the Transformer architecture? Start chatting with ScholarAgent and experience how Generative AI can simplify complex research!

ScholarAgent - https://www.kaggle.com/code/masa373dev/capstone-scholaragent